idtracker.ai

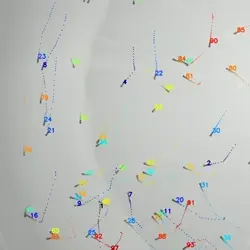

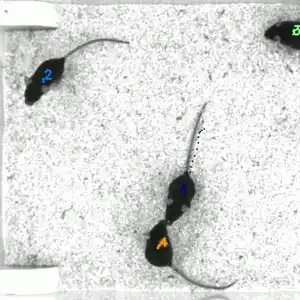

idtracker.ai uses artificial intelligence to track up to 100 unmarked animals in videos recorded under laboratory conditions. It is free and open source.

Romero-Ferrero, F., Bergomi, M.G., Hinz, R.C., Heras, F.J.H., de Polavieja, G.G., idtracker.ai: tracking all individuals in small or large collectives of unmarked animals. Nature Methods 16, 179 (2019) [PDF, arXiv]

The data used in this article can be found in this repository together with videos, their optimal tracking parameters and the resulting tracked sessions.

Source Code

The code is open and accessible at polavieja_lab/idtrackerai. Feel free to contribute.

Contact

If you run into any problems or have questions, contact us at idtrackerai@gmail.com.

Funded by Fundação Champalimaud and FCT under project PTDC/BIA-COM/5770/2020.